
TL;DR: Medical AI systems are amplifying racial and economic disparities in healthcare through biased training data, proxy variables, and opaque algorithms. Solutions include transfer learning, diverse datasets, and continuous monitoring.

By 2030, AI-driven diagnostic tools will influence the care of over three billion patients worldwide. Yet beneath the promise of precision medicine lies a troubling reality: these algorithms are amplifying the very inequities they were meant to eliminate. From kidney function calculators that delayed transplants for Black patients to risk-prediction tools that systematically underfunded care for minority communities, medical AI has become an unwitting accomplice to discrimination.

The crisis isn't theoretical. It's happening right now, in hospitals and clinics across the world, as biased algorithms quietly reshape who gets care and who gets left behind.

In 2019, researchers at Berkeley uncovered a scandal that shook healthcare AI to its core. An algorithm used by hospitals nationwide to allocate resources was systematically discriminating against Black patients. The tool, designed to identify high-risk individuals who would benefit from extra care, relied on a seemingly neutral metric: healthcare costs.

The logic appeared sound. Sicker patients cost more, so predict costs and you predict need. But this assumption ignored a brutal truth about American healthcare: Black patients receive less care and generate lower costs, not because they're healthier, but because systemic barriers keep them from accessing treatment in the first place.

The algorithm interpreted this inequality as evidence of lower need. At identical risk scores, Black patients were significantly sicker than white patients. The researchers estimated that correcting the bias would raise the share of Black patients flagged for high-risk programs from about 18% to about 47%. The tool had weaponized historical injustice, coding discrimination directly into healthcare delivery.
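The label-choice problem is easy to see in miniature. The sketch below is a toy illustration, not the audited algorithm: the four patient records, the field names, and the use of a chronic-condition count as a stand-in for health need are all assumptions made for the example. It ranks the same patients twice, once by cost and once by need, and selects the top two for extra care.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    chronic_conditions: int  # assumed stand-in for true health need
    annual_cost: float       # the quantity a cost-based tool would predict


# Hypothetical toy records: the "B" patients are sicker but generate
# lower costs because they receive less care.
patients = [
    Patient("A1", chronic_conditions=2, annual_cost=9000.0),
    Patient("A2", chronic_conditions=3, annual_cost=12000.0),
    Patient("B1", chronic_conditions=4, annual_cost=7000.0),
    Patient("B2", chronic_conditions=5, annual_cost=8000.0),
]


def top_k(records, key, k):
    """Return the names of the k records ranked highest by `key`."""
    ranked = sorted(records, key=key, reverse=True)
    return [p.name for p in ranked[:k]]


# Same patients, two different targets, two different enrollment lists.
by_cost = top_k(patients, key=lambda p: p.annual_cost, k=2)
by_need = top_k(patients, key=lambda p: p.chronic_conditions, k=2)

print("Selected by cost:", by_cost)
print("Selected by need:", by_need)
```

Ranking by cost selects only the "A" patients, while ranking by need selects only the "B" patients. Nothing about the ranking step is biased; the choice of target does all the damage.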

This wasn't an isolated incident. It was a warning shot revealing how deeply bias can penetrate systems we trust to be objective.

Machine learning models don't develop prejudice on their own. They learn it from us, through three interconnected pathways that convert human inequity into algorithmic discrimination.

The Data Problem

Training data reflects the world as it is, not as it should be. When AI models learn from historical medical records, they inherit decades of disparate treatment patterns. A 2025 study on COVID-19 mortality predictions revealed the stark mathematics of underrepresentation: out of 365,608 cases, Non-Hispanic White patients comprised 69% while American Indian patients numbered just 651, under 0.2% of the total.

For minority groups, this translates to algorithms trained predominantly on white patient data, then deployed across diverse populations. The result? In that study, Decision Tree models achieved precision of only 0.38 for Hispanic patients before correction, meaning roughly six out of ten high-risk predictions were wrong.
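Precision is the share of positive predictions that turn out to be correct, so a precision of 0.38 implies that about 62% of high-risk flags were false alarms. A minimal sketch of the arithmetic follows; the counts are hypothetical, chosen only to reproduce the 0.38 figure, and are not taken from the study.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of positive (high-risk) predictions that were correct."""
    flagged = true_positives + false_positives
    if flagged == 0:
        raise ValueError("no positive predictions to evaluate")
    return true_positives / flagged


# Hypothetical counts: 100 patients flagged high-risk, 38 of them correctly.
p = precision(true_positives=38, false_positives=62)
wrong_share = 1 - p

print(f"precision = {p:.2f}, share of flags that were wrong = {wrong_share:.2f}")
```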

According to the World Health Organization, social determinants like education, employment, and income account for up to 55% of health outcomes. When these variables enter AI models as mere correlations, algorithms can mistake poverty for destiny, encoding structural inequality as medical fact.

The Proxy Problem

Sometimes bias hides in plain sight, masquerading as neutral information. Consider zip code, a seemingly innocent geographic marker. In reality, it's a powerful proxy for race, income, and access to care, carrying all the weight of residential segregation patterns into algorithmic decisions.

For years, kidney function equations included race as a variable, systematically overestimating kidney function in Black patients. This seemingly small adjustment had profound consequences: delayed diagnoses, postponed transplant eligibility, and worse outcomes for thousands of patients. The National Kidney Foundation and American Society of Nephrology eventually removed race from these calculations, but the damage had already been done.

The Transparency Problem

Modern AI systems, particularly deep learning models, operate as black boxes. Even their creators can't always explain why a model makes specific predictions. This opacity creates a double bind: clinicians can't audit decisions for bias, and patients can't challenge recommendations they don't understand.

When doctors override AI advice more frequently for certain demographic groups, it introduces another layer of bias, one rooted in human interpretation rather than algorithmic logic. The system becomes biased not just in what it recommends, but in how those recommendations get applied.

Behind every biased prediction is a person whose care was compromised. The statistics are damning, but they only hint at the individual tragedies playing out daily in healthcare systems worldwide.

Consider the resource allocation algorithm that denied care to Black patients. Those affected didn't receive extra monitoring that might have caught complications early. They missed preventive interventions that could have avoided hospitalizations. Some likely died from conditions that more intensive management might have controlled.

IBM Watson for Oncology, once heralded as a breakthrough in cancer treatment, faced criticism for recommendations that didn't account for patient diversity. When AI trained predominantly on data from one population gets deployed globally, it can suggest treatments proven effective in clinical trials that excluded patients who look like you.

The Michigan research team that developed methods to account for bias in medical data found that without intervention, AI systems don't just reflect existing disparities; they amplify them. Each iteration of the algorithm, each update based on biased outcomes, can deepen the divide between who receives excellent care and who falls through the cracks.

For vulnerable populations already facing barriers, algorithmic bias compounds the problem. Low-income patients may be flagged as higher financial risks and denied insurance coverage. Racial minorities might be systematically undertreated by algorithms that learned from data reflecting historical discrimination. The very efficiency that makes AI attractive, its ability to process millions of cases instantly, means these mistakes happen at unprecedented scale and speed.

Recognition of the problem has spurred a wave of innovation in bias detection and mitigation. Researchers and developers are pioneering approaches that could transform AI from a source of inequity into a tool for justice.

Technical Solutions

Transfer learning emerged as a powerful technique for improving model performance across demographic groups. By fine-tuning algorithms on data from underrepresented populations, researchers boosted Decision Tree precision for Hispanic patients from 0.38 to 0.53, and for Asian patients from 0.47 to 0.61. The approach works by teaching models that learned from majority populations to recognize patterns specific to minority groups.

However, transfer learning has limits. When sample sizes become extremely small, as with the 651 American Indian patients in the COVID study, performance can actually worsen. For the tiniest minority groups, Gradient Boosting Machine precision dropped from 0.46 to 0.24 after fine-tuning, suggesting that some populations remain too underrepresented for current techniques to help.

IBM's AI Fairness 360 provides an open-source toolkit with over 70 fairness metrics and 10 bias mitigation algorithms. Developers can test models for disparate impact across demographics, then apply preprocessing, in-processing, or post-processing techniques to improve equity. Similar tools like Fairness Indicators and What-If Tool democratize bias detection, making it accessible to teams without specialized expertise.
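Disparate impact, one of the checks such toolkits report, is commonly defined as the ratio of favorable-outcome rates between an unprivileged and a privileged group. A value below 0.8 is a conventional warning sign (the "four-fifths rule"). The sketch below computes it in plain Python rather than through AI Fairness 360's own API, and the group labels and predictions are made up for illustration.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of each group that received the favorable prediction (1)."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        selected[group] += int(pred == 1)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact(groups, predictions, unprivileged, privileged):
    """Ratio of the unprivileged group's selection rate to the privileged group's."""
    rates = selection_rates(groups, predictions)
    if rates[privileged] == 0:
        raise ValueError("privileged group has no favorable predictions")
    return rates[unprivileged] / rates[privileged]


# Hypothetical data: 1 means "enrolled in the extra-care program".
groups = ["a"] * 5 + ["b"] * 5
predictions = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]

ratio = disparate_impact(groups, predictions, unprivileged="b", privileged="a")
print(f"disparate impact = {ratio:.2f}")  # below 0.8 suggests a closer look
```

In this toy data group "a" is enrolled at a rate of 0.8 and group "b" at 0.4, so the ratio is 0.5, well under the four-fifths threshold.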

Data Strategies

The most fundamental fix is improving the data itself. Inclusive data collection efforts deliberately oversample minority populations, ensuring algorithms have sufficient examples to learn from. When real-world data remains inadequate, synthetic data generation can fill gaps by creating artificial records that preserve privacy while increasing representation.

Healthcare organizations are establishing standards for dataset diversity, requiring that training data reflect the full spectrum of patients who will encounter the algorithm. This means not just racial and ethnic diversity, but also variation in age, socioeconomic status, geographic location, and underlying health conditions.

Continuous Monitoring

Post-deployment monitoring tracks performance across demographic groups over time, catching bias that emerges as populations or practices change. The FDA now requires this for AI-enabled medical devices, mandating that developers demonstrate ongoing fairness.

Without vigilant oversight, algorithms can drift silently toward discrimination. A model trained on data from five years ago might make systematically worse predictions today because disease patterns shifted, treatment protocols evolved, or patient populations changed. Regular audits using explicit bias thresholds catch these problems before they cause harm.
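An audit with an explicit bias threshold can be as simple as comparing each group's metric with the best-performing group and flagging any gap above an agreed limit. The sketch below assumes per-group precision has already been computed for the audit period; the function name, the 0.10 gap limit, and the group values are all hypothetical.

```python
def audit_precision_gaps(precision_by_group, max_gap=0.10):
    """Return groups whose precision trails the best group by more than max_gap.

    The result maps each flagged group to its gap from the best group.
    """
    best = max(precision_by_group.values())
    return {
        group: round(best - value, 4)
        for group, value in precision_by_group.items()
        if best - value > max_gap
    }


# Hypothetical per-group precision from one monitoring period.
current_period = {"group_a": 0.71, "group_b": 0.66, "group_c": 0.52}

flagged = audit_precision_gaps(current_period, max_gap=0.10)
for group, gap in flagged.items():
    print(f"{group}: precision trails the best group by {gap:.2f}")
```

Running the same check on every monitoring period turns "drift toward discrimination" into something a dashboard can alert on. The right metric and the right limit are policy decisions, not properties of the code.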

Policymakers are scrambling to catch up with the rapid deployment of AI in healthcare, crafting frameworks that balance innovation with protection.

The FDA's guidance for AI/ML medical devices mandates transparency, clinical validation across diverse populations, and post-market surveillance. Developers must submit predetermined change-control plans describing how they'll update algorithms while maintaining safety and fairness. This lifecycle approach recognizes that medical AI isn't a one-time product but an evolving system requiring continuous governance.

The Health AI Partnership brings together technology companies, healthcare providers, patient advocates, and researchers to develop consensus standards. Their work influences both voluntary industry practices and regulatory requirements, creating a bridge between technical possibility and ethical necessity.

At the state level, some jurisdictions now require algorithm impact assessments before deploying AI in insurance underwriting and resource allocation. These evaluations force organizations to demonstrate that their systems don't discriminate, shifting the burden of proof from harmed patients to technology developers.

International efforts like ISO/IEC standards for AI establish global baselines for fairness metrics, documentation practices, and testing protocols. While adoption remains voluntary in many contexts, these frameworks provide actionable guidance for organizations committed to responsible AI development.

Even perfectly fair algorithms can produce inequitable outcomes if deployed in biased contexts. Technical solutions must be paired with systemic reforms addressing the root causes of health disparities.

Clinician Training

Doctors and nurses need AI literacy training that goes beyond learning to use specific tools. Clinicians must understand how algorithms work, what biases they might harbor, and when to trust or question their recommendations. This educational imperative is especially urgent because provider behavior, whether deferring to AI for some patients but overriding it for others, can introduce bias even when the underlying model is fair.

Medical schools are beginning to integrate data science and algorithm ethics into curricula. Future doctors will graduate with knowledge of fairness metrics, understanding of how social determinants get encoded in models, and skills to critically evaluate AI-generated recommendations.

Community Engagement

The Equitable AI Research Roundtable exemplifies a growing movement toward community-centered AI development. By bringing together legal experts, educators, social justice advocates, and technologists with affected communities, these collaborations ensure that bias mitigation reflects the priorities of those most at risk of harm.

Patient advisory boards review algorithms before deployment, identifying potential concerns that engineers might miss. Community health workers provide ground-level insights about how AI tools perform in real-world settings. This participatory approach transforms development from a top-down technical exercise into a collective effort to advance health equity.

Policy Reform

Technology alone can't fix structural racism in healthcare. Algorithmic fairness initiatives must accompany broader reforms: expanding insurance coverage, addressing social determinants of health, recruiting diverse medical professionals, and dismantling institutional practices that perpetuate disparities.

The Brookings Institution argues that responsible AI development requires addressing the social context in which algorithms operate. An algorithm that fairly allocates limited resources doesn't eliminate the problem of resources being limited in the first place. Equity demands not just fair distribution of inadequate care, but ensuring everyone has access to excellent care.

We stand at a crossroads. One path leads to a healthcare system where algorithmic bias entrenches inequality, automating discrimination at scale. The other path leads to AI that actively reduces disparities, learning from the full diversity of human experience and extending quality care to underserved populations.

Recent research offers glimpses of this better future. Studies published in JAMA Network Open demonstrate that carefully designed AI can identify at-risk patients who would otherwise fall through the cracks, targeting interventions to those who need them most regardless of race or income. Emerging techniques in fairness-aware machine learning show how to build equity into models from the ground up rather than attempting to fix bias after the fact.

The technology exists to create fair algorithms. What remains uncertain is whether we'll muster the collective will to deploy them. This requires sustained investment in diverse data collection, rigorous testing for bias, transparent reporting of failures, and accountability when algorithms cause harm.

For healthcare professionals, the path forward means treating AI recommendations with appropriate skepticism, especially for patients from underrepresented groups. For developers, it means embedding fairness into every stage of the development lifecycle and prioritizing equity alongside accuracy. For policymakers, it means crafting regulations with teeth, holding organizations accountable when their algorithms discriminate.

The stakes couldn't be higher. Medical AI will shape healthcare for generations to come. Whether it becomes a tool for justice or an engine of inequity depends on choices we make right now. The algorithms are watching us, learning from our decisions, and amplifying our values, both noble and ignoble. We have a brief window to teach them well.

The question isn't whether AI will transform healthcare. It already has. The question is who that transformation will serve.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

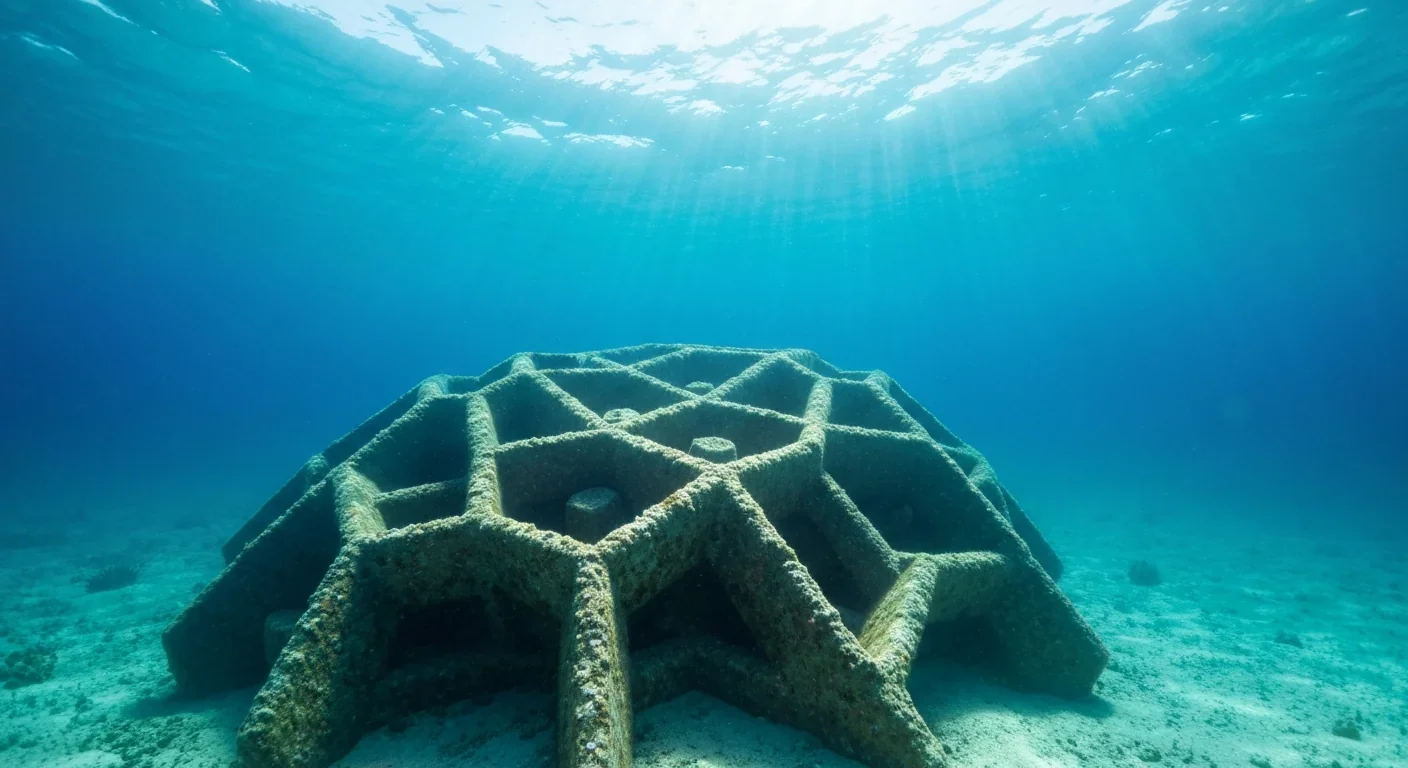

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

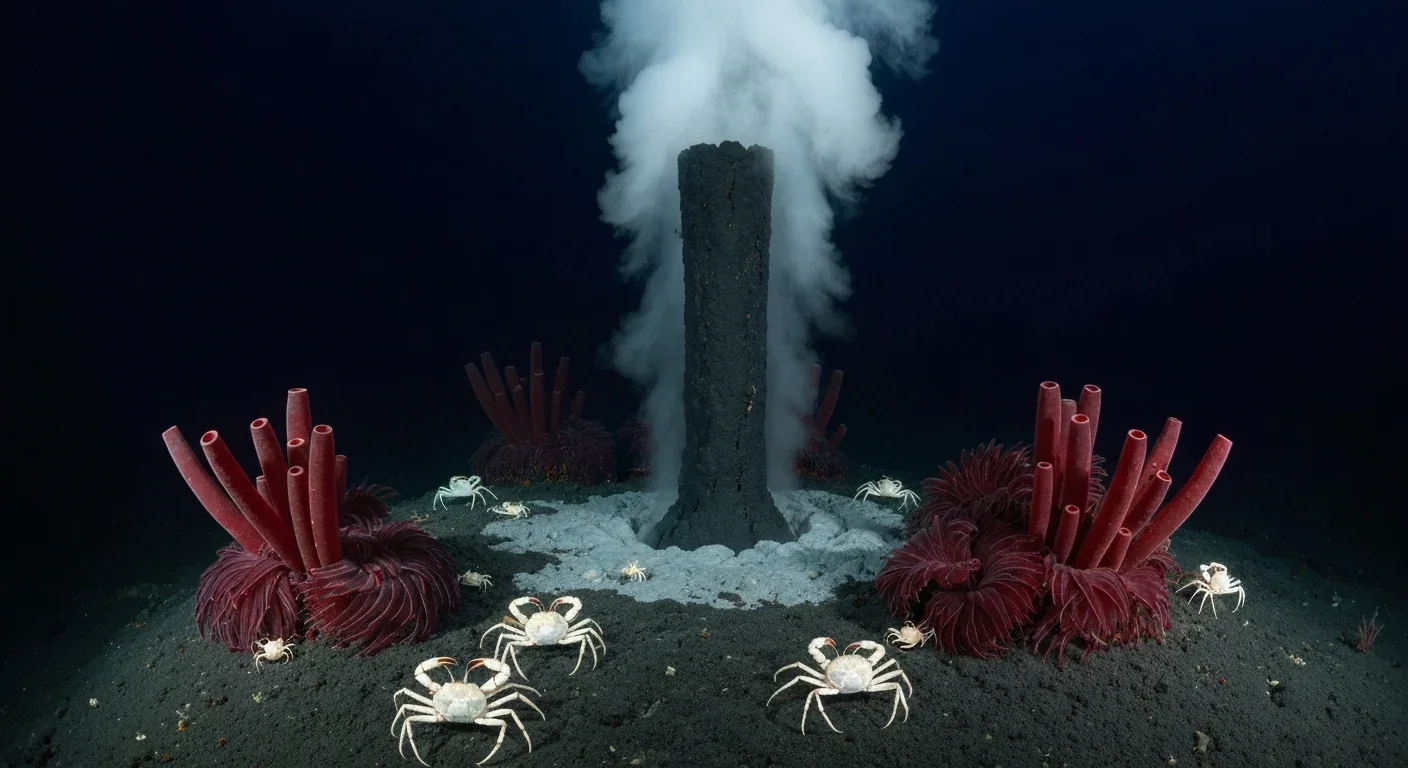

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

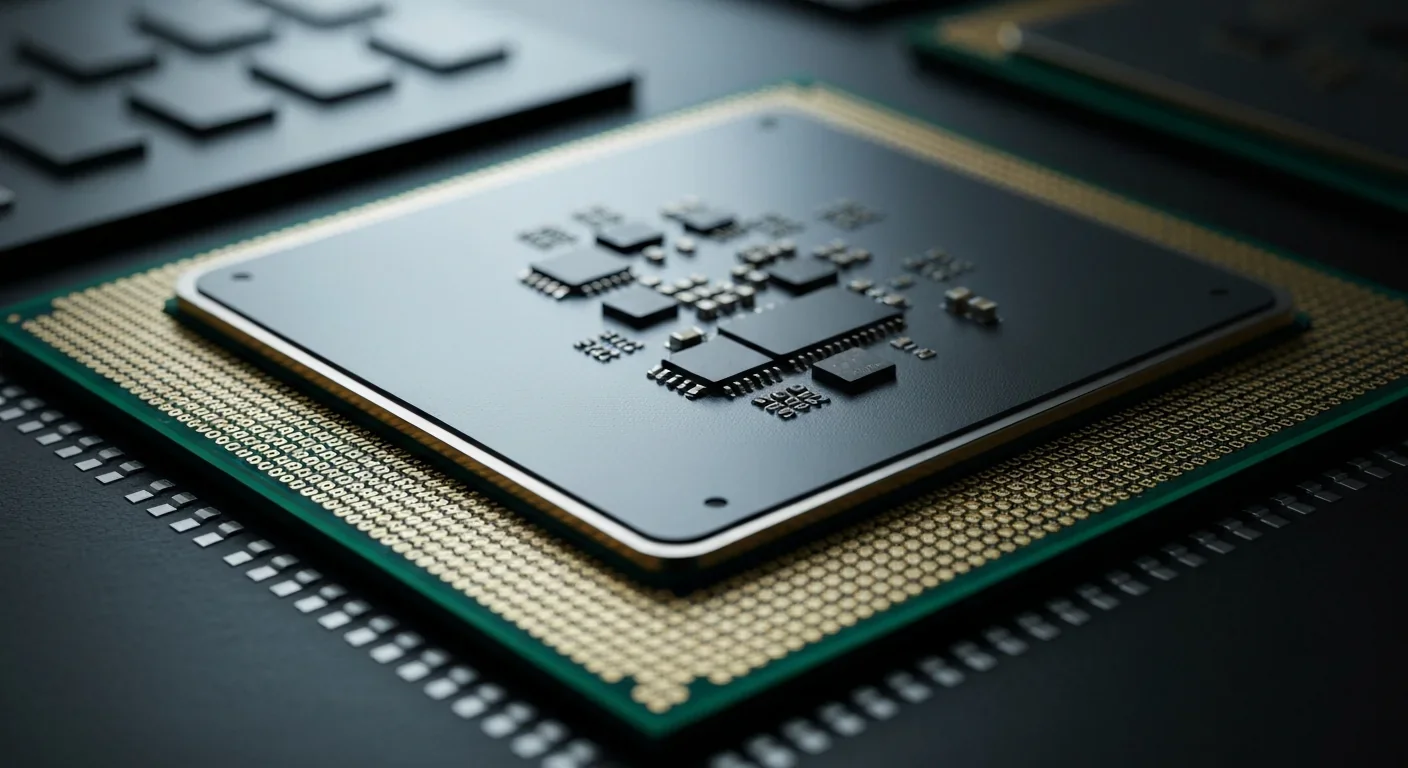

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.