
TL;DR: Explainable AI (XAI) is breaking down the black-box barrier in medical diagnostics, giving doctors visual explanations of how algorithms reach decisions through attention maps, SHAP scores, and counterfactual scenarios. While FDA regulations now mandate transparency in medical AI, real-world implementations show XAI improves both trust and diagnostic accuracy by transforming algorithms from mysterious oracles into collaborative partners that show their work.

A radiologist stares at a chest X-ray flagged by an AI system. "Cancer detected," the algorithm announces with 94% confidence. But where? Why? The doctor sees nothing alarming in the usual places. Without understanding the AI's reasoning, she faces an impossible choice: trust the black box or trust her years of training. This moment of algorithmic opacity plays out thousands of times daily across hospitals worldwide, and it's killing the potential of AI to transform medicine.

The problem isn't that AI can't diagnose disease - it's that nobody knows how. Explainable AI (XAI) technologies are finally changing that, transforming inscrutable algorithms into transparent collaborators that show their work. The shift from black-box to glass-box AI could determine whether artificial intelligence becomes medicine's most powerful tool or its most dangerous liability.

The numbers should have sold physicians on AI years ago. Deep learning models now match or exceed human performance in detecting diabetic retinopathy, identifying skin cancer, and spotting lung nodules on CT scans. Some systems analyze pathology slides faster than entire teams of specialists. Yet adoption remains glacial, and the reason is brutally simple: doctors don't trust what they can't understand.

A randomized survey experiment of American patients revealed the depth of this trust crisis. When participants learned their doctor used AI to assist diagnosis, trust scores plummeted from 0.50 to 0.30 on a standardized scale. The more extensively AI was used, the lower the trust - even when the AI improved accuracy. The message was clear: people want human judgment, or at least the illusion of it.

When patients learn their doctor uses AI to assist diagnosis, trust scores drop by 40% (from 0.50 to 0.30) - even when the AI improves accuracy. The crisis isn't performance; it's transparency.

Clinicians share this skepticism, but for more sophisticated reasons. A mixed-methods study of Polish physicians found that while 70% recognized AI's potential benefits, fewer than 40% expressed willingness to integrate it into clinical workflows. The barrier wasn't technological literacy - it was accountability. Who's responsible when an opaque algorithm misdiagnoses a patient? The doctor who followed its recommendation? The hospital that deployed it? The company that built it?

This isn't mere technophobia. Medical decision-making requires justification. Doctors must explain their reasoning to patients, defend their choices in peer review, and testify in malpractice cases. "The computer said so" doesn't hold up in court, as legal experts increasingly warn. Black-box AI creates a liability nightmare where no one can explain why a treatment was chosen or a diagnosis made.

Traditional machine learning models offered some interpretability. Logistic regression showed which factors increased disease risk. Decision trees mapped clinical pathways in ways doctors could trace. But modern deep learning traded transparency for performance. Neural networks with millions of parameters learned patterns too complex for human comprehension. The result was diagnostic accuracy that came with an unacceptable cost: mystery.

Explainable AI doesn't make neural networks simple - it makes their decisions visible. Instead of just producing a diagnosis, XAI systems highlight which parts of an image, which data points, or which patient characteristics drove the conclusion. Think of it as showing the AI's work, the way math teachers demanded you reveal your calculations even when the answer was correct.

The most widely deployed XAI technique in medical imaging is attention mapping. These systems generate heatmaps overlaying medical images, with bright colors indicating regions the AI weighted heavily in its decision. When an algorithm flags a suspicious lung nodule, the attention map shows exactly which pixels triggered the alert - the calcification pattern, the irregular borders, the surrounding tissue changes that suggest malignancy.

Saliency visualization takes this further by ranking the importance of every image feature. Rather than a simple heatmap, it creates a gradient showing which elements most influenced the model. A comprehensive review in the journal Sensors describes how saliency maps help radiologists understand not just where the AI looked, but what it saw - the subtle texture changes invisible to human eyes but telltale to trained algorithms.
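One simple way to produce a heatmap of this kind is occlusion sensitivity: blank out one patch of the image at a time and record how far the model's score falls. The sketch below is a minimal illustration under stated assumptions, not any deployed system's method. `model_score` is a hypothetical stand-in for a trained classifier, and the "scan" is synthetic noise with an artificially bright region.

```python
import numpy as np

def model_score(image: np.ndarray) -> float:
    """Hypothetical stand-in for a trained classifier: it responds only to
    brightness inside a fixed 4x4 region (rows 8-11, cols 8-11)."""
    return float(image[8:12, 8:12].mean())

def occlusion_heatmap(image, score_fn, patch=4, fill=0.0):
    """Slide a patch over the image, blank it out, and record how much the
    score drops. Large drops mark regions the model relied on."""
    base = score_fn(image)
    heat = np.zeros_like(image, dtype=float)
    rows, cols = image.shape
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            occluded = image.copy()
            occluded[r:r + patch, c:c + patch] = fill
            heat[r:r + patch, c:c + patch] = base - score_fn(occluded)
    return heat

rng = np.random.default_rng(0)
image = rng.uniform(0.2, 0.4, size=(16, 16))  # synthetic background
image[8:12, 8:12] += 0.5                      # synthetic bright "nodule"

heat = occlusion_heatmap(image, model_score)
peak_row, peak_col = np.unravel_index(np.argmax(heat), heat.shape)
print("hottest pixel:", peak_row, peak_col)
```

Because the toy model only reads one region, the heatmap is zero everywhere else. With a real network, the same procedure tends to produce a graded map, and gradient-based saliency methods are a common, cheaper alternative.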

"Saliency maps help radiologists understand not just where the AI looked, but what it saw - the subtle texture changes invisible to human eyes but telltale to trained algorithms."

- Sensors Journal Research Review

For non-imaging diagnostics, SHAP (SHapley Additive exPlanations) has become a de facto standard. This technique assigns each input variable an additive score representing how far it pushed the prediction up or down relative to a baseline. When an AI predicts heart disease risk, SHAP might reveal that elevated troponin levels accounted for 35% of the total attribution, family history 20%, blood pressure 15%, and so forth. Research on kidney condition classification demonstrated how combining SHAP with attention maps creates multi-layered explanations doctors can actually use.
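To make the idea of per-feature contributions concrete, here is a minimal sketch that computes exact Shapley values by enumerating every feature coalition. The risk model, its weights, the baseline, and the patient values are all hypothetical and chosen so the shares match the percentages in the example above. Production SHAP tooling generally relies on faster model-specific or approximate estimators rather than brute-force enumeration.

```python
from itertools import combinations
from math import factorial

# Hypothetical linear risk score; the weights are illustrative only.
WEIGHTS = {"troponin": 0.35, "family_history": 0.20,
           "blood_pressure": 0.15, "other_factors": 0.30}
BASELINE = {name: 0.0 for name in WEIGHTS}  # assumed "average patient"
PATIENT = {name: 1.0 for name in WEIGHTS}   # assumed elevated on every input

def risk(features):
    return sum(WEIGHTS[k] * v for k, v in features.items())

def value(coalition):
    """Model output when only the features in `coalition` take the patient's
    values and all others stay at the baseline."""
    mixed = {k: (PATIENT[k] if k in coalition else BASELINE[k]) for k in WEIGHTS}
    return risk(mixed)

def shapley_values():
    names = list(WEIGHTS)
    n = len(names)
    phi = {}
    for name in names:
        others = [x for x in names if x != name]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                # Weight of this coalition in the Shapley average.
                w = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += w * (value(set(subset) | {name}) - value(set(subset)))
        phi[name] = total
    return phi

phi = shapley_values()
total_attribution = sum(phi.values())
shares = {name: round(100 * v / total_attribution) for name, v in phi.items()}
print(shares)
```

The values sum to the gap between the patient's prediction and the baseline prediction, which is what lets them be read as shares of a single decision. For a linear model like this one they reduce to weight times deviation from baseline; the enumeration only becomes interesting when features interact.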

Counterfactual explanations answer a different question: what would need to change for the AI to predict differently? "If the patient's A1C were 6.5% instead of 8.2%, the model would not flag diabetes risk." These what-if scenarios help clinicians understand decision boundaries and identify which interventions might alter outcomes. They transform AI from a diagnostic oracle into a planning tool.
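A what-if scenario like this can be sketched as a search for the smallest change to one input that flips the model's output. The example below assumes a hypothetical two-feature logistic model with made-up coefficients, so the threshold it finds is illustrative and has no clinical meaning. Only A1C is varied; every other feature is held fixed.

```python
import math

def diabetes_risk(a1c: float, bmi: float) -> float:
    """Hypothetical logistic risk model; the coefficients are made up."""
    logit = 1.2 * (a1c - 7.0) + 0.08 * (bmi - 27.0)
    return 1.0 / (1.0 + math.exp(-logit))

def flagged(patient, threshold=0.5):
    return diabetes_risk(patient["a1c"], patient["bmi"]) >= threshold

def a1c_counterfactual(patient, step=0.1, floor=4.0):
    """Lower A1C in small steps, holding other features fixed, and return
    the first (closest) candidate the model no longer flags."""
    steps = int(round((patient["a1c"] - floor) / step))
    for i in range(1, steps + 1):
        candidate = dict(patient, a1c=round(patient["a1c"] - i * step, 1))
        if not flagged(candidate):
            return candidate
    return None  # no counterfactual found in the searched range

patient = {"a1c": 8.2, "bmi": 31.0}
cf = a1c_counterfactual(patient)
if cf is not None:
    print(f"If A1C were {cf['a1c']}% instead of {patient['a1c']}%, "
          "the model would not flag diabetes risk.")
```

Searching from the patient's current value outward returns the nearest flip point, which is usually the most actionable one. Real counterfactual methods also have to respect which features can change at all and which combinations are clinically plausible.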

The technical challenge is making these explanations both accurate and useful. Early XAI methods sometimes highlighted plausible but incorrect features - the AI appeared to focus on relevant anatomy when it actually keyed on imaging artifacts or metadata. Advanced evaluation frameworks now test whether explanations truly reflect model behavior or just create comforting illusions of transparency.

At Southampton University Hospital and Airedale NHS Trust in the UK, researchers deployed an explainable AI system for fetal growth scans that does something remarkable: it shows sonographers not just measurements, but where the algorithm placed anatomical landmarks and why. The system overlays its reasoning directly onto ultrasound images, marking the skull diameter, abdominal circumference, and femur length it used for calculations.

The validation study spanned three institutions and involved end-users at every level - sonographers, radiologists, obstetricians, and midwives. Results were striking: 89% of clinicians found the explanations improved their confidence in AI-assisted measurements. More importantly, when the AI and human operators disagreed, the visual explanations helped identify which party had erred. Sometimes the AI caught subtle anatomical variations humans missed. Other times, clinicians recognized that the AI had misidentified landmarks because of unusual fetal positioning. The transparency turned potential conflicts into teaching moments.

In oncology, XAI is transforming tumor detection from MRI and CT scans. At several European cancer centers, radiologists now use systems that not only flag suspicious lesions but generate detailed visual explanations showing texture patterns, edge characteristics, and tissue density variations that triggered alerts. One radiologist described the experience as "like having a colleague point out what they're seeing, instead of just saying 'I think it's cancer.'"

89% of clinicians found XAI explanations improved their confidence in AI-assisted measurements. Transparency turned potential conflicts into teaching moments.

The benefits extend beyond trust. Studies show that explainable AI actually improves diagnostic performance when clinicians disagree with initial AI assessments. With black-box systems, doctors often defer to the algorithm even when their instincts say otherwise - they assume the machine knows something they don't. With transparent AI, physicians can evaluate whether the algorithm's reasoning makes medical sense. This critical thinking leads to better outcomes than blindly accepting or rejecting AI recommendations.

Clinical decision support systems are also embracing XAI. At major academic medical centers, AI tools for treatment recommendations now show which clinical guidelines, patient characteristics, and research evidence informed their suggestions. When an AI recommends a specific chemotherapy regimen, it displays the similar patient cohorts, survival outcomes, and contraindications it considered - turning an algorithmic output into a literature review personalized to the patient at hand.

Perhaps most promisingly, counterfactual explanations are being used to personalize depression medication selection. Rather than just suggesting an antidepressant, these systems show patients and psychiatrists how treatment outcomes might differ based on dose adjustments, combination therapies, or alternative medications. The AI essentially runs simulations: "Based on patients with similar profiles, switching from an SSRI to an SNRI increased remission rates by 23%." This transforms AI from a prescription generator into a decision-support partner.

While clinicians debated XAI's merits, regulators made the decision for them. In 2024, the FDA issued comprehensive guidance on AI-enabled medical devices that made transparency not optional but mandatory. The guidance requires manufacturers to document how AI models reach decisions, what data trained them, and how they handle edge cases.

The FDA's predetermined change control plans add another layer of accountability. Because AI models learn and evolve, the FDA now requires companies to specify in advance how algorithms will be updated, what performance metrics will trigger changes, and how these modifications will be validated. It's a regulatory framework built on the assumption that explainability is not a feature but a requirement.

The guidance specifically addresses the "black box" problem. Medical device AI must provide "appropriate transparency" about its operations, limitations, and reasoning - vague language that essentially mandates some form of XAI. Manufacturers can't just demonstrate that their algorithm works; they must explain how it works to a degree that clinicians and regulators can evaluate whether the approach is medically sound.

"Medical device AI must provide appropriate transparency about its operations, limitations, and reasoning. Manufacturers can't just show their algorithm works - they must explain how it works."

- FDA Guidance on AI-Enabled Medical Devices

European regulators went further. Under the EU AI Act, AI-based medical devices that need third-party conformity assessment under the Medical Device Regulation - a group that includes most diagnostic tools - count as high-risk systems and require extensive documentation of decision-making processes. International regulatory harmonization efforts are pushing similar standards globally, creating a de facto requirement that medical AI be explainable or remain unapproved.

The liability landscape reinforces these requirements. Legal experts analyzing algorithmic malpractice scenarios conclude that physicians who use opaque AI face heightened liability risk. Courts expect doctors to exercise independent judgment. If an AI system provides no explanation, judges and juries may view the physician's reliance on it as abdicating professional responsibility.

Conversely, explainable AI could become a legal shield. If a doctor can demonstrate they reviewed the AI's reasoning, evaluated its logic against clinical evidence, and made an informed decision incorporating the AI's input, they're on much stronger legal ground than if they simply followed a black-box recommendation. XAI transforms AI from a liability into evidence of thorough, technology-informed decision-making.

Here's the uncomfortable truth XAI researchers rarely advertise: making AI explainable sometimes makes it less accurate. The most interpretable models - decision trees, linear regression, rule-based systems - often perform worse than deep neural networks on complex medical tasks. Comprehensive reviews of XAI in medical imaging acknowledge this tension between transparency and performance.

The tradeoff stems from how neural networks learn. Their power comes from discovering intricate, high-dimensional patterns that no human-designed rule set could capture. When you force a model to be interpretable - to make decisions based on features humans can understand - you constrain it to human-comprehensible patterns. Sometimes the most accurate approach is one we can't fully understand.

Researchers are attacking this problem from both ends. One approach: build inherently interpretable models that rival black-box performance. Recent work on interpretable machine learning in healthcare shows promise with "hybrid" architectures that use deep learning for feature extraction but interpretable models for final decisions. The neural network handles the complex pattern recognition, then hands off to a transparent decision layer doctors can audit.
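The hybrid idea can be sketched in a few lines: an opaque extractor turns the image into a handful of named scores, and a small linear layer turns those scores into a decision whose terms can be read off one by one. Everything here is an assumption made for illustration. `extract_concepts` uses simple image statistics where a real system would use a trained network, and the concept names and coefficients are invented.

```python
import numpy as np

CONCEPTS = ["texture_variation", "mean_density", "bright_pixel_count"]

def extract_concepts(image: np.ndarray) -> np.ndarray:
    """Stand-in for a deep feature extractor. Simple image statistics play
    the role a trained network would play in a real system."""
    return np.array([image.std(), image.mean(), float((image > 0.5).sum())])

# Transparent decision layer: hypothetical logistic-regression parameters.
COEF = np.array([1.5, 2.0, 0.01])
INTERCEPT = -2.0

def predict_with_audit(image: np.ndarray):
    """Return the probability plus one additive logit term per concept."""
    z = extract_concepts(image)
    contributions = COEF * z
    logit = INTERCEPT + contributions.sum()
    prob = 1.0 / (1.0 + np.exp(-logit))
    return float(prob), dict(zip(CONCEPTS, contributions.tolist()))

image = np.full((8, 8), 0.6)  # synthetic uniform patch
prob, audit = predict_with_audit(image)
for name, term in audit.items():
    print(f"{name:>20}: {term:+.2f}")
print(f"probability: {prob:.2f}")
```

The audit trail is exact for the final layer: the logit is the intercept plus the listed terms. What it cannot show is how the extractor arrived at each concept score, which is where the remaining opacity lives.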

The other approach: accept some opacity in exchange for rigorous post-hoc explanation. Rather than constraining the model during training, generate the best possible explanations after the fact. The risk is that these explanations might be plausible but inaccurate - the AI appears to reason one way while actually operating differently. Advanced evaluation frameworks now test "explanation faithfulness" to ensure XAI methods truly reflect model behavior.
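One common style of faithfulness check is a deletion test: reset the features an explanation ranks highest and see whether the model's output actually falls. The sketch below assumes a hypothetical linear model and two hand-written attribution vectors, one that matches what the model uses and one that merely looks confident. It illustrates the idea rather than any specific published framework.

```python
import numpy as np

weights = np.array([2.0, 0.1, 0.0, 1.5, 0.05])  # hypothetical model weights
x = np.array([1.0, 1.0, 1.0, 1.0, 1.0])         # one patient's inputs
baseline = np.zeros_like(x)                     # assumed reference values

def model(v: np.ndarray) -> float:
    return float(weights @ v)

def deletion_drop(attribution: np.ndarray, k: int = 2) -> float:
    """Reset the k features the explanation ranks highest to baseline and
    report how far the model output falls."""
    top_k = np.argsort(-np.abs(attribution))[:k]
    ablated = x.copy()
    ablated[top_k] = baseline[top_k]
    return model(x) - model(ablated)

faithful = weights * (x - baseline)              # matches what the model uses
plausible = np.array([0.1, 0.2, 3.0, 0.1, 2.5])  # confident-looking but wrong

drop_faithful = deletion_drop(faithful)    # removes features 0 and 3
drop_plausible = deletion_drop(plausible)  # removes features 2 and 4
print(drop_faithful, drop_plausible)
```

A faithful explanation points at features whose removal moves the output a lot; an unfaithful one points at features the model barely uses. On a real network the baseline choice matters, since replacing pixels or lab values with zeros can itself push inputs outside the training distribution.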

Different medical contexts demand different balances. For screening tests meant to catch potential cases for human review, slightly lower accuracy might be acceptable if explainability helps doctors triage flagged cases. For emergency diagnostics where speed and accuracy are paramount, less interpretable but more accurate models might be preferable, with explanations providing confidence rather than primary decision support.

Some researchers question whether the tradeoff is even necessary. A 2025 review in Archives argues that perceived performance penalties from interpretability requirements often reflect poor model design rather than fundamental limitations. Well-architected XAI systems can approach black-box performance while maintaining transparency - it just requires more sophisticated engineering.

The debate matters because it shapes how we think about AI's role in medicine. If we must choose between accuracy and interpretability, we're deciding whether AI should be an autonomous decision-maker we trust blindly or a collaborative tool we interrogate constantly. XAI's promise is that we won't have to choose - that transparency and performance can coexist.

The psychology of trust in AI doesn't work how technologists assumed. Accuracy alone doesn't convince skeptics. A system that's right 95% of the time but won't explain its reasoning often loses to a 90% accurate system that shows its work. Research on thoughtful AI implementation found that transparency affects trust more powerfully than performance metrics.

A 95% accurate system that won't explain its reasoning often loses trust to a 90% accurate system that shows its work. In medicine, transparency matters more than perfection.

Part of this is human nature - we're wired to trust things we understand over things that merely work. But in medicine, there's a deeper dynamic at play. Doctors aren't just users of diagnostic tools; they're accountable for outcomes. When a treatment goes wrong, "the algorithm recommended it" is not an acceptable defense. Physicians need to understand AI recommendations deeply enough to take ownership of them.

Studies of clinician-AI collaboration reveal an interesting pattern: the most successful implementations don't aim to replace human judgment but to make it more informed. Radiologists who use XAI-enhanced tools describe them as "smart second readers" rather than oracles. The AI catches things they might miss; they catch things the AI misinterprets. The partnership works because both parties' reasoning is visible.

This collaborative model addresses a problem that stymied earlier AI adoption: the competence-trust paradox. Less experienced clinicians needed AI assistance most but were least equipped to evaluate its recommendations. Experts could spot when AI went wrong but least needed the help. XAI shifts this dynamic by making AI reasoning pedagogical. Junior doctors learn from watching how the AI analyzes cases, while experts can audit whether the AI's approach aligns with best practices.

Patient trust follows a similar pattern. Randomized experiments on AI consultation found that patients initially reacted negatively to AI-assisted diagnosis - until they understood how it worked. When AI's role was framed as "helping your doctor analyze more data than humanly possible" rather than "replacing human judgment," acceptance rates increased dramatically. Transparency transformed AI from a threat into a superpower.

The National Academy of Medicine's comprehensive analysis of AI adoption barriers concludes that the technical challenge isn't building accurate AI - it's building AI that healthcare systems can trust. That requires not just explainability but validation that explanations reflect reality, education so clinicians can interpret explanations correctly, and cultural change so transparency becomes an expectation rather than a feature.

The explainable AI revolution is quietly rewriting medicine's relationship with technology. Within five years, opaque diagnostic AI will likely be unmarketable in major healthcare markets. Regulators have spoken; clinicians have drawn their line. The next generation of medical AI will be transparent by design, not as an afterthought.

This shift will accelerate AI adoption rather than slow it. Healthcare policy experts project that clear regulatory frameworks for explainable AI will reduce deployment uncertainty, making healthcare systems more willing to invest in these technologies. When hospitals know what regulators expect and can demonstrate compliance through XAI documentation, the business case for AI implementation strengthens.

We're likely to see specialization in XAI approaches. Radiology will converge on attention mapping and saliency visualization - techniques that overlay explanations onto the images clinicians already scrutinize. Clinical decision support will favor SHAP and counterfactual explanations - methods that translate statistical relationships into clinical reasoning. Pathology might embrace interactive XAI where users can ask the AI "why" questions and receive increasingly detailed explanations.

The technology is also creating new roles. "AI interpreters" - clinicians trained specifically in understanding and communicating AI reasoning - are emerging at leading medical centers. These specialists help bridge the gap between data scientists who build models and frontline clinicians who use them. As healthcare organizations navigate AI integration challenges, this translator role may become standard.

Longer-term, XAI could transform medical education itself. Imagine medical students learning from AI systems that don't just diagnose but explain their differential diagnosis process, highlighting which symptoms they weighted heavily and why. Clinical training frameworks incorporating XAI essentially create infinite case studies with expert-level reasoning exposed - a pedagogical resource that's never existed before.

The most profound change may be philosophical. For centuries, medicine combined art and science - systematic knowledge mixed with intuition born of experience. AI threatened to reduce everything to algorithms, eliminating the art. XAI suggests a different future: one where technology augments intuition rather than replacing it, where machines and humans reason together rather than in isolation, and where transparency ensures that the march of progress doesn't leave human judgment behind.

XAI has limitations researchers are only beginning to confront. Current explanation techniques work well for "what" questions - what patterns did the AI detect, what factors influenced its decision. They're much worse at "why" questions - why those patterns matter, why certain factors should be weighted heavily. A heatmap showing the AI focused on a specific region tells you nothing about whether that focus reflects valid medical reasoning or spurious correlation.

There's also the problem of explanation diversity. Different XAI methods applied to the same model sometimes generate contradictory explanations. SHAP might identify blood pressure as the most important variable while LIME (Local Interpretable Model-agnostic Explanations), another popular attribution method, emphasizes heart rate. Research on XAI evaluation is developing methods to test which explanations are most faithful to actual model behavior, but consensus standards remain elusive.
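Disagreement between methods can at least be measured. The sketch below compares two attribution vectors using Spearman rank correlation and top-k overlap. The scores are made-up numbers standing in for the output of two explanation methods on the same prediction; they are not real SHAP or LIME results.

```python
import numpy as np

features = ["blood_pressure", "heart_rate", "age", "cholesterol", "bmi"]
# Made-up attribution scores standing in for two explanation methods.
method_a = np.array([0.40, 0.25, 0.15, 0.12, 0.08])
method_b = np.array([0.22, 0.45, 0.05, 0.18, 0.10])

def ranks(scores: np.ndarray) -> np.ndarray:
    """Rank 0 = most important. Assumes there are no tied scores."""
    order = np.argsort(-scores)
    r = np.empty(len(scores), dtype=int)
    r[order] = np.arange(len(scores))
    return r

def spearman(a: np.ndarray, b: np.ndarray) -> float:
    """Spearman rank correlation for tie-free score vectors."""
    d = ranks(a) - ranks(b)
    n = len(a)
    return 1.0 - 6.0 * float(np.sum(d ** 2)) / (n * (n ** 2 - 1))

def top_k_overlap(a: np.ndarray, b: np.ndarray, k: int = 2) -> float:
    """Fraction of the top-k features that both methods agree on."""
    top_a = set(np.argsort(-a)[:k].tolist())
    top_b = set(np.argsort(-b)[:k].tolist())
    return len(top_a & top_b) / k

rho = spearman(method_a, method_b)
overlap = top_k_overlap(method_a, method_b)
top_a = features[int(np.argmax(method_a))]
top_b = features[int(np.argmax(method_b))]
print(f"rank correlation {rho:.2f}, top-2 overlap {overlap:.0%}")
print(f"method A leads with {top_a}, method B with {top_b}")
```

In this toy case the two methods agree on which two features matter most but not on which comes first, and they diverge further down the list. Agreement metrics like these flag the conflict; they do not say which explanation is the faithful one.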

The "illusion of understanding" poses another risk. Plausible-sounding explanations can create false confidence in flawed models. If an AI highlights a lung nodule and a doctor thinks "yes, that makes sense," but the AI actually keyed on an irrelevant imaging artifact, the explanation has made things worse by validating an error. Robust XAI requires not just generating explanations but verifying they're correct - a technically demanding challenge.

Perhaps most troubling: XAI might not actually change outcomes if cultural and workflow barriers persist. Implementation research shows that even when clinicians have access to excellent XAI tools, time pressure, alert fatigue, and institutional resistance can prevent meaningful engagement with AI explanations. Transparency only builds trust if people have the time and incentive to examine it.

The regulatory framework also remains incomplete. While the FDA demands transparency, it hasn't specified exactly how much or what kind. This ambiguity leaves manufacturers guessing about what will satisfy reviewers - a recipe for either over-engineering expensive explanations or under-delivering and facing rejection. Clearer standards would help, but defining "sufficiently explainable" across diverse medical applications may be impossible.

The explainable AI movement reflects something deeper than a technical fix for black-box algorithms. It's a negotiation between human and machine intelligence about the terms of their collaboration. Medicine has always valued both empirical evidence and clinical judgment, data and experience, protocol and intuition. AI's arrival forced a reckoning: would algorithms replace judgment or inform it?

XAI is medicine's answer: neither replacement nor mere assistance, but partnership. The algorithms bring pattern-recognition capabilities no human can match - the ability to simultaneously consider thousands of data points, to learn from millions of cases, to spot subtle signals buried in noise. Humans bring context, skepticism, the ability to recognize when rules don't apply, and the irreplaceable capacity to care about the patient as a person rather than a probability distribution.

Getting this partnership right matters enormously. Healthcare faces a looming crisis of complexity: exponentially growing medical knowledge that no individual can master, increasingly personalized treatments requiring integration of genomic and clinical data, and chronic shortages of specialists in many fields. AI could address all these challenges - or it could create new ones if implemented poorly.

The path forward requires resisting two extremes. Blind AI boosterism that dismisses concerns about transparency creates dangerous overreliance on flawed systems. But reflexive AI skepticism that demands impossibly perfect explainability preserves human limitations while rejecting tools that could save lives. The hard middle ground - thoughtful deployment of transparent AI that augments rather than replaces human judgment - is where medicine needs to land.

We're witnessing the birth of a new diagnostic paradigm. Twenty years from now, doctors will likely look back at the pre-XAI era the way we now view medicine before imaging technology: it worked, but practitioners were navigating with far less information than necessary. The difference is that this revolution comes with an instruction manual - explanations that make the technology accessible rather than mysterious, collaborative rather than autonomous.

The doctor facing that flagged chest X-ray now has options. The AI doesn't just announce "cancer detected." It shows her the subtle ground-glass opacity in the left lower lobe, highlights the spiculated margins consistent with malignancy, and notes that the pattern resembles 487 confirmed cases in its training data. Armed with this explanation, she can evaluate whether the AI's reasoning makes medical sense, order appropriate follow-up tests, and explain to her patient why further investigation is warranted.

This is explainable AI's promise: not algorithms that think for us, but algorithms that think with us - their reasoning visible, their logic testable, their assistance transforming from threatening mystery into transparent collaboration. Whether that promise is realized or squandered depends on choices being made right now in hospitals, regulatory agencies, and research labs around the world. The black box is opening. What we do with what's inside will shape medicine's future.

Rotating detonation engines use continuous supersonic explosions to achieve 25% better fuel efficiency than conventional rockets. NASA, the Air Force, and private companies are now testing this breakthrough technology in flight, promising to dramatically reduce space launch costs and enable more ambitious missions.

Triclosan, found in many antibacterial products, is reactivated by gut bacteria and triggers inflammation, contributes to antibiotic resistance, and disrupts hormonal systems - but plain soap and water work just as effectively without the harm.

AI-powered cameras and LED systems are revolutionizing sea turtle conservation by enabling fishing nets to detect and release endangered species in real-time, achieving up to 90% bycatch reduction while maintaining profitable shrimp operations through technology that balances environmental protection with economic viability.

The pratfall effect shows that highly competent people become more likable after making small mistakes, but only if they've already proven their capability. Understanding when vulnerability helps versus hurts can transform how we connect with others.

Leafcutter ants have practiced sustainable agriculture for 50 million years, cultivating fungus crops through specialized worker castes, sophisticated waste management, and mutualistic relationships that offer lessons for human farming systems facing climate challenges.

Gig economy platforms systematically manipulate wage calculations through algorithmic time rounding, silently transferring billions from workers to corporations. While outdated labor laws permit this, European regulations and worker-led audits offer hope for transparency and fair compensation.

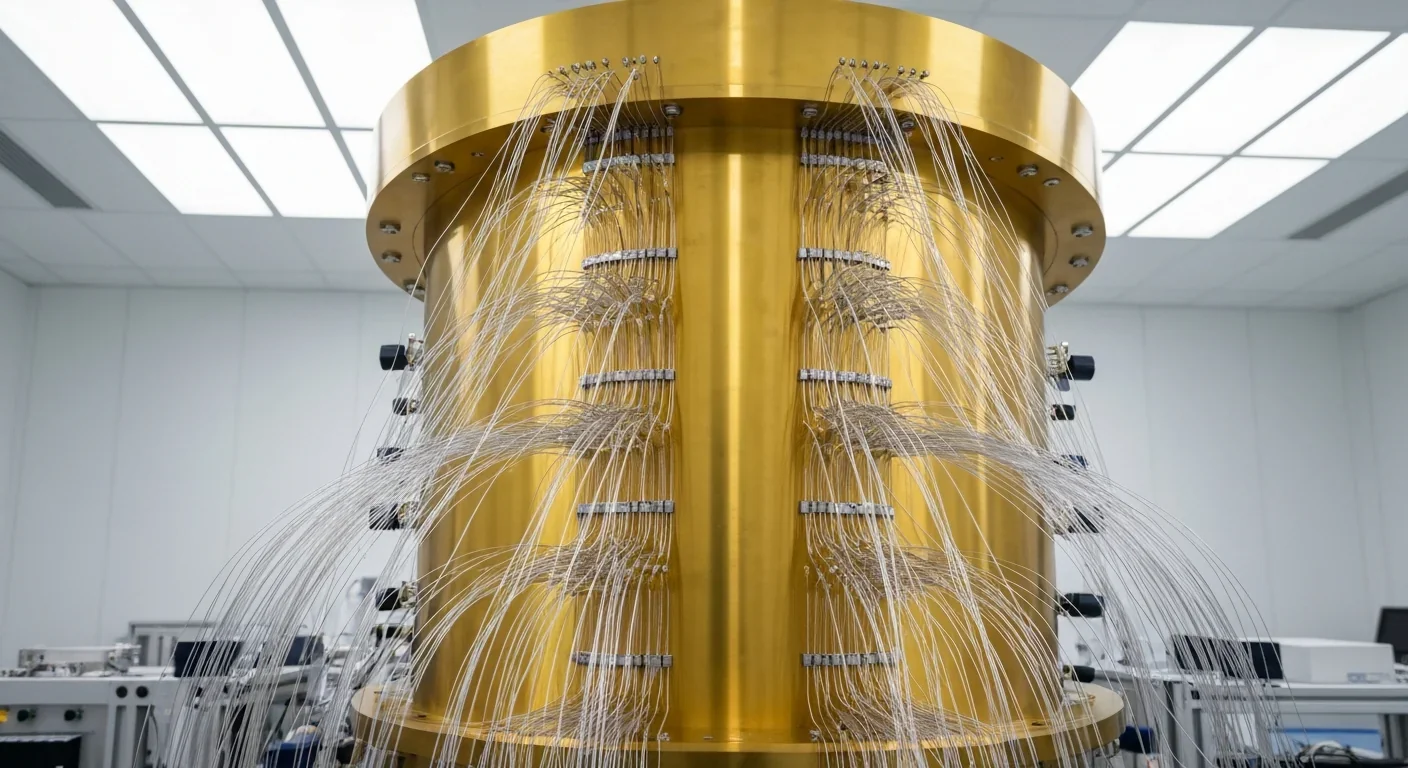

Quantum computers face a critical but overlooked challenge: classical control electronics must operate at 4 Kelvin to manage qubits effectively. This requirement creates engineering problems as complex as the quantum processors themselves, driving innovations in cryogenic semiconductor technology.